AI against AI: The digital arms race in cybersecurity

While you're reading this, cybercriminals are training AI models to break through your defenses. Attackers keep innovating, so security teams need to as well. In this blog, you'll discover how AI is being used by both attackers and defenders, and how you can win that battle.

Introduction

In a world where digital threats grow more sophisticated every day, artificial intelligence has become a crucial player in the battle between attackers and defenders. AI is increasingly penetrating the business world, integrated into software and processes at every level. Both offensive and defensive teams use AI to achieve their goals. In this blog, we explore how malicious actors deploy AI and how Blue Teams can use it to detect and counter these attacks.

History

The idea of artificial intelligence dates back to 1950, when Alan Turing asked in his paper 'Computing Machinery and Intelligence' whether machines can think. At the time, he couldn't properly define what 'thinking' meant in this context or what kind of 'machines' should be used. It wasn't until the 1980s that the first systems became available for practical AI use. However, limited computing power and data availability kept development restricted. Real progress only started around 2010, when powerful GPUs became available that could train AI models faster and more data, both online and offline, became available as input for these models.

Since then, AI has been increasingly deployed in cybersecurity. It is mainly used to improve spam filters and detect phishing emails, but also to help antivirus software identify unknown malware and to detect statistical anomalies in network traffic.

AI usage took off with the first release of ChatGPT by OpenAI in November 2022. GPT stands for generative pre-trained transformer, a type of model that generates content in response to user prompts: text at first, and later also speech and images. From that moment, the general public could experience AI firsthand, and it was rapidly integrated into countless applications.

Cybercrime in numbers

Using AI from a hacker perspective

Social media is a favorite source for malicious actors. More and more information is shared on platforms like LinkedIn, Facebook, and X. By capitalizing on an organization's current campaigns, analyzing its language use, and gaining insight into its employees, attackers feed their AI tools with information. These tools then generate phishing emails with the right tone of voice, based on current topics, that appear to come from the right colleague.

AI is also used to find vulnerabilities in email systems, helping phishing emails slip past mail filters. Phishing emails primarily aim to:

- Obtain user credentials

- Access non-public data

- Execute malicious applications

Once a malicious actor has a foothold within the organization, or has information about the systems in use, they can execute AI-automated vulnerability scans. These scans can be expanded so that discovered vulnerabilities are automatically exploited. Dark GPT models like WormGPT and FraudGPT can generate scripts that create exploit chains based on known vulnerabilities. Through machine learning, malicious programs adapt their behavior to their environment, avoiding detection by traditional antivirus and SIEM systems.

AI from the blue team perspective

By using AI capabilities within email systems, the system keeps learning new phishing patterns. Email service providers play an important role here: they can integrate the characteristics of known phishing emails into their filters, so that similar emails are blocked next time. By using smart vulnerability scans (vulnerability management), organizations can identify infrastructure vulnerabilities early and take action in time.

User and Entity Behavior Analytics (UEBA) is an advanced cybersecurity technique that uses machine learning and behavioral analysis to detect anomalies within digital environments. It focuses on identifying compromised entities such as firewalls, servers, and databases, as well as suspicious activity from malicious insiders or compromised accounts. By linking logs and data from multiple systems, a baseline of 'normal' use by an entity or user can be established. AI then analyzes ongoing usage against this baseline, and deviations in behavior generate alerts that a security team can investigate further.

A practical example from our own experience shows how UEBA helped us act in time on a compromised user account. An account manager regularly worked in various Western European countries for client visits: Spain, Belgium, and the Netherlands. The work took place between 9 AM and 8 PM. An alert was raised when the account was used to log in from a French city at 10 PM. These logins indeed turned out to be illegitimate.
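The account manager example can be illustrated with a minimal Python sketch. This is not how a UEBA product works internally: real systems use statistical and machine learning models over far more signals. The `Login` class, the function names, the user name, and the sample timestamps are all hypothetical and only serve to show the idea of comparing a login against a learned baseline.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Login:
    user: str
    country: str
    timestamp: datetime


def build_baseline(history):
    """Learn per user which countries and which range of hours are normal."""
    baseline = {}
    for event in history:
        hour = event.timestamp.hour
        profile = baseline.setdefault(
            event.user, {"countries": set(), "first_hour": hour, "last_hour": hour}
        )
        profile["countries"].add(event.country)
        profile["first_hour"] = min(profile["first_hour"], hour)
        profile["last_hour"] = max(profile["last_hour"], hour)
    return baseline


def deviations(event, baseline):
    """Return the reasons why a login deviates from the user's baseline."""
    profile = baseline.get(event.user)
    if profile is None:
        return ["no baseline for user"]
    reasons = []
    if event.country not in profile["countries"]:
        reasons.append(f"unusual country: {event.country}")
    hour = event.timestamp.hour
    if not profile["first_hour"] <= hour <= profile["last_hour"]:
        reasons.append(f"unusual hour: {hour:02d}:00")
    return reasons


# Made-up login history: client visits in Spain, Belgium, and the Netherlands.
history = [
    Login("account.manager", "ES", datetime(2025, 3, 3, 9, 15)),
    Login("account.manager", "BE", datetime(2025, 3, 4, 13, 40)),
    Login("account.manager", "NL", datetime(2025, 3, 5, 19, 45)),
]
baseline = build_baseline(history)

normal = deviations(Login("account.manager", "NL", datetime(2025, 3, 6, 10, 0)), baseline)
suspicious = deviations(Login("account.manager", "FR", datetime(2025, 3, 6, 22, 5)), baseline)
```

In this sketch, the login from the Netherlands during working hours yields no reasons, while the login from France at 10 PM yields two, which is the kind of deviation that would be handed to a security team as an alert.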

Using Microsoft Defender for Cloud and Sentinel

Microsoft Sentinel is a security information and event management (SIEM) solution, available from the Microsoft Azure environment. Since July 2025, Sentinel has offered a data lake in which you can consolidate all your security data. Data collectors create connections to log sources from various systems. These can be Microsoft (Azure) systems, but collectors are also available for third-party vendors, or they can be created manually for specific customer applications. The result is a single, enriched source on which you can investigate and trigger (automated) response actions.

For example, an alert from Entra ID about a login attempt from the United Kingdom isn't necessarily suspicious as an isolated incident. It can, however, be enriched with an alert from Exchange that the same user clicked a link in a suspicious email. Combined, these are worth investigating.

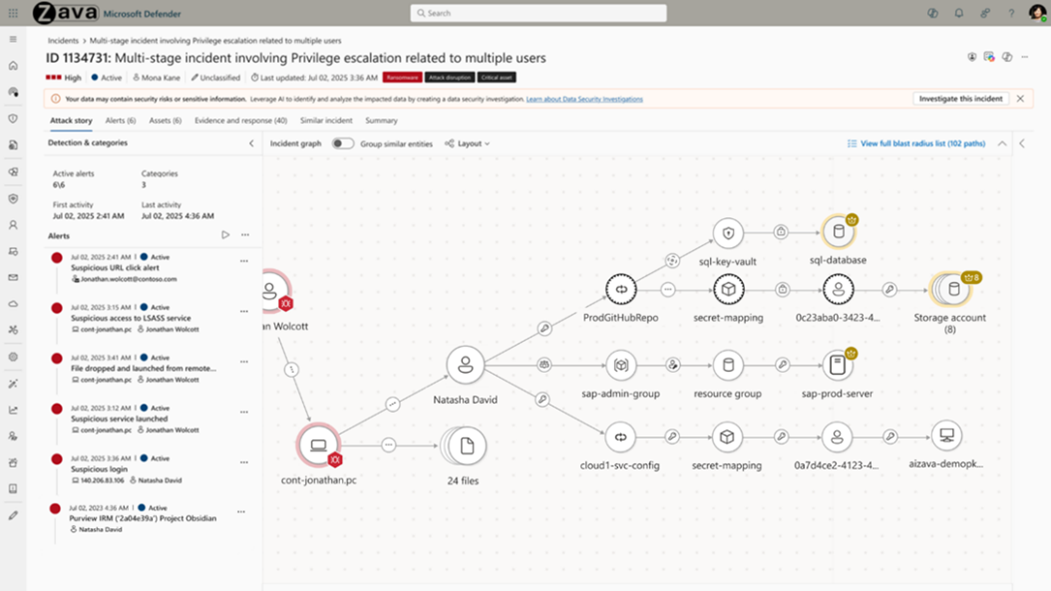
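The enrichment of an Entra ID alert with an Exchange alert can be sketched in a few lines of Python. This is only an illustration of the correlation idea, not Sentinel's API or rule language. The `Alert` class, the `correlate` function, the 24-hour window, and the sample users and timestamps are assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    source: str        # illustrative labels such as "EntraID" or "Exchange"
    user: str
    description: str
    timestamp: datetime


def correlate(alerts, window=timedelta(hours=24)):
    """Return, per user, alerts from at least two sources within the window."""
    by_user = defaultdict(list)
    for alert in alerts:
        by_user[alert.user].append(alert)

    incidents = {}
    for user, items in by_user.items():
        items.sort(key=lambda a: a.timestamp)
        for i, first in enumerate(items):
            # All alerts that follow `first` within the time window.
            related = [a for a in items[i:] if a.timestamp - first.timestamp <= window]
            if len({a.source for a in related}) >= 2:
                incidents[user] = related
                break
    return incidents


# Made-up alerts: only alice has signals from two different sources.
alerts = [
    Alert("EntraID", "alice", "Login attempt from United Kingdom", datetime(2025, 1, 10, 8, 0)),
    Alert("Exchange", "alice", "Clicked link in suspicious email", datetime(2025, 1, 10, 9, 30)),
    Alert("EntraID", "bob", "Login attempt from United Kingdom", datetime(2025, 1, 10, 8, 5)),
]
incidents = correlate(alerts)
```

Here only the first user ends up in `incidents`, because two different sources reported on the same account within the window. The second user's single login alert stays below the threshold, just as an isolated login from abroad would.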

In late September 2025, Microsoft released Sentinel graph in public preview. With this tool, both security teams and AI can see connections, assess potential dangers, and act quickly, both before and after a security incident.

By combining the use of the data lake with Sentinel graph, security incidents can be enriched with a visual representation.

During a security incident, such a representation quickly provides insight into the path a malicious actor can take to move toward critical systems. The security team can use this information to mitigate the attack path, limiting the impact of a security incident.
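The idea of an attack path can be illustrated with a plain breadth-first search over a small graph. This sketch does not use Sentinel graph or any Microsoft API. The host names, the edges between them, and the `shortest_attack_path` function are hypothetical and only show how a path toward a critical system can be found and how removing one connection changes it.

```python
from collections import deque


def shortest_attack_path(graph, start, critical):
    """Breadth-first search for the shortest path from start to a critical node."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in critical:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no path to a critical system


# Made-up environment: an edge means "can reach or log on to".
graph = {
    "workstation-01": ["file-server", "jump-host"],
    "jump-host": ["domain-controller"],
    "file-server": ["backup-server"],
    "backup-server": ["domain-controller"],
}
critical = {"domain-controller"}

path = shortest_attack_path(graph, "workstation-01", critical)

# Mitigation: cut the connection from the jump host to the domain controller.
mitigated = {**graph, "jump-host": []}
path_after = shortest_attack_path(mitigated, "workstation-01", critical)
```

In this example the shortest route runs through the jump host. After that connection is cut, a longer route through the file server and backup server remains, which shows why the remaining paths need to be reviewed after each mitigation.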

Using AI within your organization

An AI system also learns from its mistakes. As described in the UEBA example, the system needs to learn what a valid user routine is. A new system will certainly generate many false positives in the beginning. System specialists need to review these alerts, and through the feedback they provide, the system will perform the analysis differently next time.

Final thoughts

Attackers generally face fewer management layers that need to approve the use of a new tool. This allows them to adapt quickly to the latest developments and exploit the newest vulnerabilities. To offer solid resistance, your organization also needs to stay on top of the latest developments. Knowledge of these developments needs to be available across the different specialist teams, and the right processes need to be in place so that quick action can be taken when the situation demands it.

The battle between attackers and defenders is a cat-and-mouse game where speed, intelligence, and adaptability are key. Only by deploying AI smartly and strategically can security teams gain the upper hand.

Why Argus IT

History

https://www.forbes.com/sites/bernardmarr/2023/05/19/a-short-history-of-chatgpt-how-we-got-to-where-we-are-today

https://en.wikipedia.org/wiki/Computing_Machinery_and_Intelligence

https://en.wikipedia.org/wiki/History_of_artificial_intelligence

Cybercrime in numbers

https://www.ncsc.nl/binaries/ncsc/documenten/publicaties/2024/oktober/28/csbn-2024-turbulente-tijden-onvoorziene-effecten/Cybersecuritybeeld+2024_turbulente+tijden%2C+onvoorziene+effecten.pdf

https://www.dutchitchannel.nl/news/590974/kosten-van-cyberaanvallen-blijven-stijgen

https://www.dutchitleaders.nl/news/713552/een-op-de-vijf-nederlandse-bedrijven-ondervond-in-2024-schade-door-cybercrime-

https://www.cbs.nl/nl-nl/longread/aanvullende-statistische-diensten/2025/cybersecuritymonitor-2024

https://www.knowbe4.com/hubfs/Insurance-Report-WhitePaper-2025-EN-US_F.pdf

https://sosafe-awareness.com/resources/reports/cybercrime-trends

Use of AI from a hacker perspective

https://www.microsoft.com/en-us/security/blog/2025/09/24/ai-vs-ai-detecting-an-ai-obfuscated-phishing-campaign

https://www.dutchitleaders.nl/news/713309/gebruik-generatieve-ai-op-de-werkvloer-neemt-toe

https://undetectable.ai/research/nl/ai-cybercriminaliteit-2025

AI from a blue team perspective

https://www.microsoft.com/en-us/security/blog/2025/07/07/learn-how-to-build-an-ai-powered-unified-soc-in-new-microsoft-e-book

https://www.microsoft.com/en-us/security/blog/2025/09/30/empowering-defenders-in-the-era-of-agentic-ai-with-microsoft-sentinel

https://www.fortinet.com/resources/cyberglossary/artificial-intelligence-in-cybersecurity

https://www.microsoft.com/en-us/security/business/security-101/what-is-user-entity-behavior-analytics-ueba

https://techcommunity.microsoft.com/blog/microsoft-security-blog/introducing-microsoft-sentinel-graph-public-preview/4456368

Using Microsoft Defender for Cloud and Sentinel

https://techcommunity.microsoft.com/blog/microsoft-security-blog/introducing-microsoft-sentinel-graph-public-preview/4456368

https://www.microsoft.com/en-us/security/blog/2025/09/30/empowering-defenders-in-the-era-of-agentic-ai-with-microsoft-sentinel

https://techcommunity.microsoft.com/blog/microsoft-security-blog/announcing-microsoft-sentinel-model-context-protocol-mcp-server-–-public-preview/4456405

https://www.microsoft.com/en-us/security/blog/2025/07/22/microsoft-sentinel-data-lake-unify-signals-cut-costs-and-power-agentic-ai